Kafka

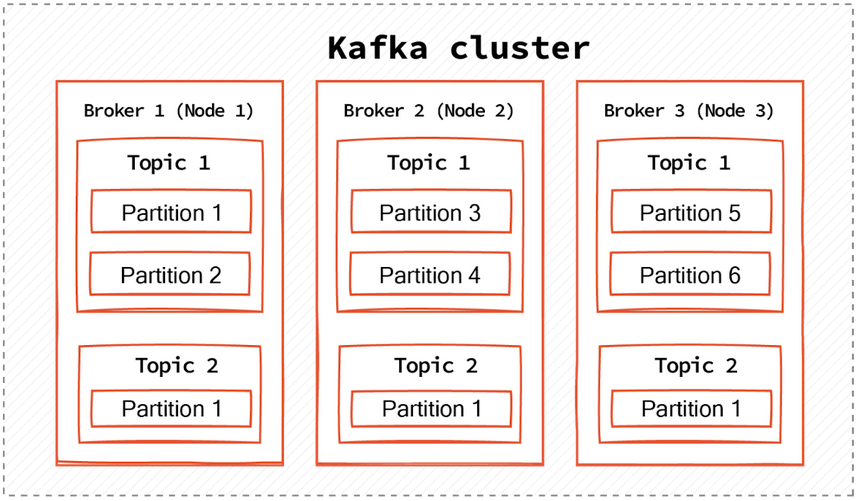

Kafka server, Kafka broker, and Kafka node are synonyms for the same concept.

A Kafka broker hosts topics; more precisely, it stores the partitions that make up those topics.

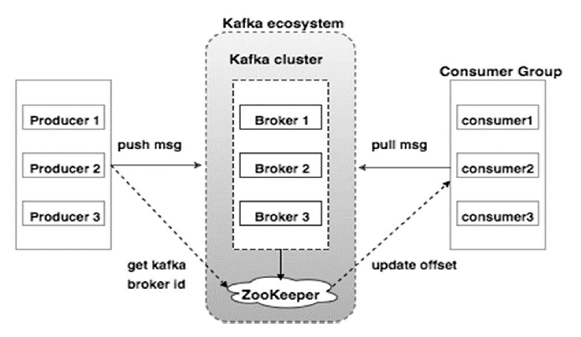

A Kafka cluster is a group of Kafka brokers (servers) that work together to handle the incoming and outgoing data streams for a Kafka system.

Kafka Cluster

Kafka Broker

Kafka Topic

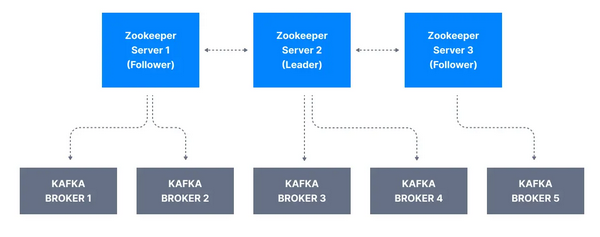

ZooKeeper

ZooKeeper elects one server as leader, which processes all writes. The remaining servers are followers, which serve reads and forward writes to the leader.

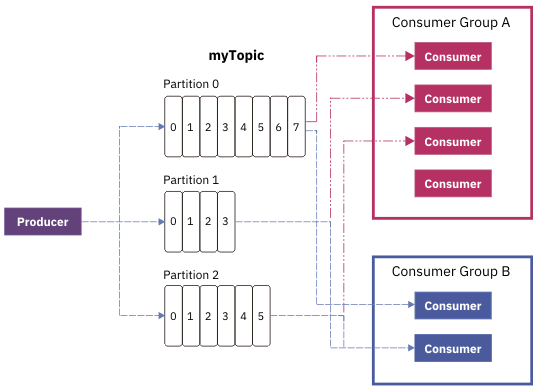

When a partition leader crashes, Kafka elects a new leader for that partition from the brokers that hold an in-sync replica of it. Producers and consumers then refresh their metadata and switch to the new leader.
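The failover described above can be illustrated with a toy model. This is only a sketch under simplifying assumptions, not Kafka's controller logic: the class `PartitionFailoverSketch`, its methods, and the broker ids are hypothetical, and the "next in-sync replica becomes leader" rule is a simplification of the real election.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;

// Toy model of a single partition's replica set (hypothetical, not Kafka code).
class PartitionFailoverSketch {
    // Broker ids that currently hold an in-sync copy of the partition.
    private final List<Integer> inSyncReplicas;
    private Integer leader;

    PartitionFailoverSketch(List<Integer> replicas) {
        this.inSyncReplicas = new ArrayList<>(replicas);
        this.leader = inSyncReplicas.isEmpty() ? null : inSyncReplicas.get(0);
    }

    // Current leader, or empty if no in-sync replica is left.
    Optional<Integer> leader() {
        return Optional.ofNullable(leader);
    }

    // Simulate a broker crash: drop it from the in-sync set and, if it was
    // the leader, promote the next remaining in-sync replica.
    void brokerCrashed(int brokerId) {
        inSyncReplicas.remove(Integer.valueOf(brokerId));
        if (leader != null && leader == brokerId) {
            leader = inSyncReplicas.isEmpty() ? null : inSyncReplicas.get(0);
        }
    }

    public static void main(String[] args) {
        PartitionFailoverSketch partition = new PartitionFailoverSketch(List.of(1, 2, 3));
        System.out.println("Leader before crash: " + partition.leader());
        partition.brokerCrashed(1);
        System.out.println("Leader after crash: " + partition.leader());
    }
}
```

Clients in this model would call `leader()` again after a failure, which loosely mirrors how real producers and consumers refresh their metadata.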

bootstrap-server

Whenever we implement Kafka producers or consumers, one of the things we need to configure is a “bootstrap.servers” property.

Producer:

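    Properties config = new Properties();
    config.put("client.id", InetAddress.getLocalHost().getHostName());
    config.put("bootstrap.servers", "host1:9092,host2:9092");
    config.put("acks", "all");
    // Serializers are mandatory; String is used here only as an example type.
    config.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
    config.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
    KafkaProducer<String, String> producer = new KafkaProducer<>(config);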

Consumer:

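    Properties config = new Properties();
    config.put("client.id", InetAddress.getLocalHost().getHostName());
    config.put("group.id", "foo");
    config.put("bootstrap.servers", "host1:9092,host2:9092");
    // Deserializers are mandatory; String is used here only as an example type.
    config.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
    config.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
    KafkaConsumer<String, String> consumer = new KafkaConsumer<>(config);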

The bootstrap.servers configuration is a comma-separated list of hostname:port pairs that address one or more (even all) of the brokers. The client uses this list only for the initial connection, in these steps:

- pick a broker from the list, falling back to another entry if that broker cannot be reached

- send a request to the broker to fetch the cluster metadata, which contains information about topics, partitions, and the leader broker for each partition (each broker can provide this metadata)

- connect to the leader broker for the chosen partition of the topic
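The first of these steps can be sketched in plain Java without the Kafka client. This is an illustration only: `BootstrapListSketch`, `parse`, and `firstReachable` are hypothetical names, the reachability check is passed in as a predicate rather than performed over the network, and the real client's selection logic is more involved.

```java
import java.net.InetSocketAddress;
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;
import java.util.function.Predicate;

// Hypothetical helper that mimics how a bootstrap list could be handled.
class BootstrapListSketch {

    // Split "host1:9092,host2:9092" into unresolved host/port pairs.
    static List<InetSocketAddress> parse(String bootstrapServers) {
        List<InetSocketAddress> brokers = new ArrayList<>();
        for (String entry : bootstrapServers.split(",")) {
            String trimmed = entry.trim();
            if (trimmed.isEmpty()) {
                continue; // tolerate trailing commas
            }
            int colon = trimmed.lastIndexOf(':');
            if (colon <= 0 || colon == trimmed.length() - 1) {
                throw new IllegalArgumentException("Expected host:port but got: " + trimmed);
            }
            String host = trimmed.substring(0, colon);
            int port = Integer.parseInt(trimmed.substring(colon + 1));
            brokers.add(InetSocketAddress.createUnresolved(host, port));
        }
        return brokers;
    }

    // Return the first broker accepted by the caller-supplied reachability check.
    static Optional<InetSocketAddress> firstReachable(
            List<InetSocketAddress> brokers, Predicate<InetSocketAddress> reachable) {
        return brokers.stream().filter(reachable).findFirst();
    }

    public static void main(String[] args) {
        List<InetSocketAddress> brokers = parse("host1:9092,host2:9092");
        // Pretend only host2 answers; a real check would open a connection.
        Optional<InetSocketAddress> chosen =
                firstReachable(brokers, b -> b.getHostString().equals("host2"));
        System.out.println("Bootstrap broker: " + chosen);
    }
}
```

Listing more than one broker matters for exactly this reason: if one entry is down, the client can still obtain the cluster metadata from another.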

Sample

Let's assume we use a simple Docker image that runs Kafka in KRaft mode (without ZooKeeper). We can start a container from this image with this command:

    docker run -p 9092:9092 -d bashj79/kafka-kraft

This runs a single Kafka instance available at port 9092 within the container and on the host.
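Before wiring up a client, it can help to confirm that the published port accepts TCP connections. The following is a minimal sketch using only the Java standard library; `PortCheckSketch` and `isPortOpen` are hypothetical names, and an open port only shows that something is listening, not that it is a healthy Kafka broker.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper: checks whether a TCP port accepts connections.
class PortCheckSketch {

    static boolean isPortOpen(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or host not resolvable.
            return false;
        }
    }

    public static void main(String[] args) {
        // Port 9092 matches the -p 9092:9092 mapping in the docker command.
        boolean open = isPortOpen("localhost", 9092, 2000);
        System.out.println("localhost:9092 reachable: " + open);
    }
}
```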

Within a Java application, we can use the Kafka client:

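    import java.util.Arrays;
    import java.util.Properties;
    import org.apache.kafka.clients.consumer.Consumer;
    import org.apache.kafka.clients.consumer.ConsumerConfig;
    import org.apache.kafka.clients.consumer.KafkaConsumer;
    import org.apache.kafka.common.serialization.LongDeserializer;
    import org.apache.kafka.common.serialization.StringDeserializer;

    static Consumer<Long, String> createConsumer() {
        final Properties props = new Properties();
        // The client tries these entries only to discover the cluster.
        props.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG,
                "localhost:9092,another-host.com:29092");
        props.put(ConsumerConfig.GROUP_ID_CONFIG, "MySampleConsumer");
        props.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG,
                LongDeserializer.class.getName());
        props.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG,
                StringDeserializer.class.getName());

        // Create the consumer using props.
        final Consumer<Long, String> consumer = new KafkaConsumer<>(props);

        // Subscribe to the topic.
        consumer.subscribe(Arrays.asList("samples"));
        return consumer;
    }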
